Check out the video about the tool.

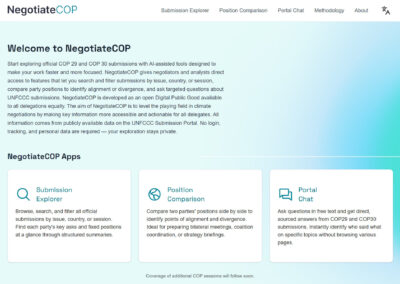

Right on time for the 30th annual Climate Conference (COP30) in Belém, we launched our new tool “NegotiateCOP”, which helps delegates navigate the extensive volume of documents submitted to the UNFCCC submission portal by more than 190 attending countries. The large language model (LLM)-based tool comprises three main features: Submission Explorer, Position Comparison and Portal Chat, all designed to assist with the labour-intensive preparations for complex negotiations. Small or under-resourced delegations without the support of large back offices benefit in particular from the quick access and clear overview NegotiateCOP provides.

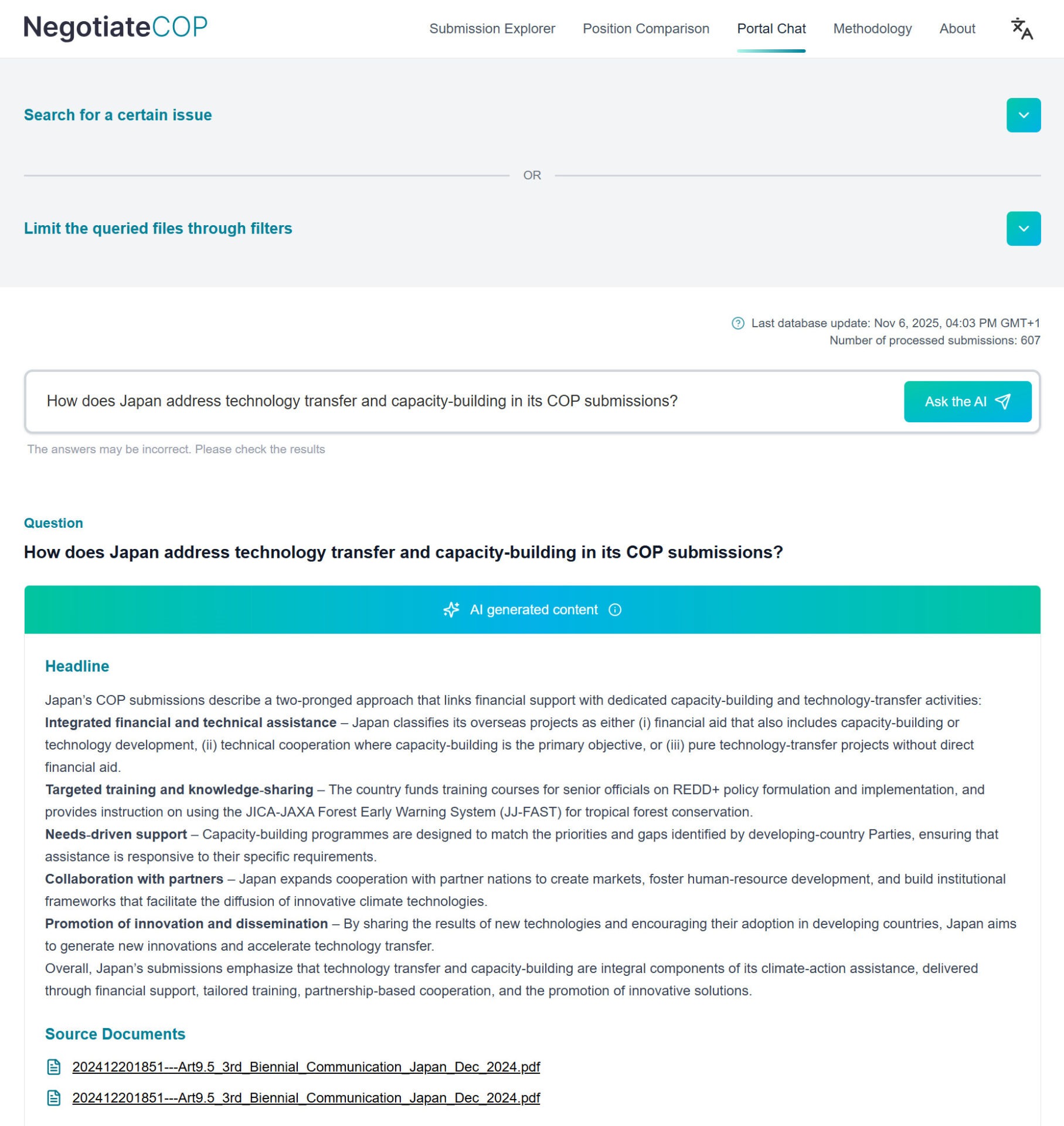

Landing page of NegotiateCOP

One Tool – Three Components

NegotiateCOP consists of three components that provide different access points to, and insights into, the documents on the UNFCCC submission portal.

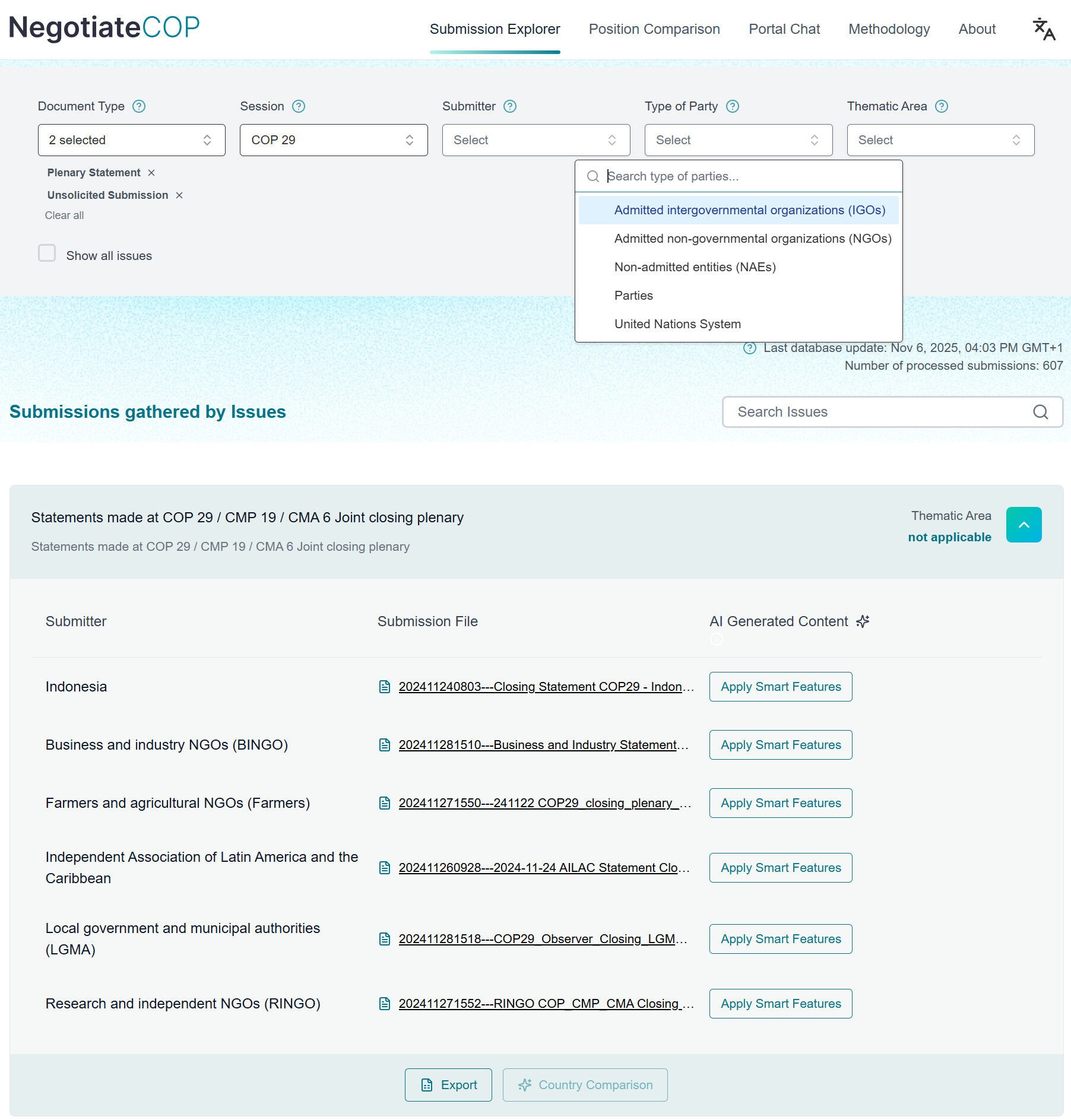

Submission Explorer: The Submission Explorer can be used to browse, search, and filter official submissions for COP29, COP30 and their subsidiary bodies by document type, session, submitter, type of party or thematic area. The submissions are organized in so-called “issues” linked to the thematic areas. A thematic area can contain many issues, and the documents uploaded by the submitting parties are listed below each issue. The list of all matching documents is displayed and can be exported as a table.
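The filtering and table export described above can be pictured with a small sketch. It is not the tool's implementation: the `Submission` record, its field names, the sample entries and the CSV output are all assumptions chosen for illustration.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class Submission:
    # Hypothetical metadata record; the field names are assumptions.
    title: str
    session: str
    submitter: str
    party_type: str
    thematic_area: str
    issue: str
    document_type: str


def filter_submissions(submissions, **criteria):
    """Keep the submissions whose fields equal every given criterion."""
    return [
        s for s in submissions
        if all(getattr(s, name) == value for name, value in criteria.items())
    ]


def to_csv(submissions):
    """Render the submissions as a CSV table with one header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=[f.name for f in fields(Submission)],
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(asdict(s) for s in submissions)
    return buffer.getvalue()


# Invented sample data, not taken from the portal.
SUBMISSIONS = [
    Submission("Views on finance goal", "COP30", "Party A", "Party",
               "Finance", "Example finance issue", "Views Submission"),
    Submission("Report on adaptation practices", "COP29", "Observer B", "Observer",
               "Adaptation", "Example adaptation issue", "Report Submission"),
    Submission("Statement at opening plenary", "COP30", "Party C", "Party",
               "Finance", "Example finance issue", "Plenary Statement"),
]

hits = filter_submissions(SUBMISSIONS, session="COP30", document_type="Views Submission")
print(to_csv(hits))
```

Combining several criteria narrows the result in the same way as stacking filters in the Explorer; CSV merely stands in here for whichever table format the tool exports.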

AI smart functions can be applied to a selected submission to generate extended metadata. They give users a quick summary of the text or extract specific demands from the document as so-called “key asks” or “fixed positions”. The document types do not correspond to the official UNFCCC taxonomy; they were newly defined to enable consistent, AI-accessible classification. For example, we distinguish between “views submissions” and “plenary statements” to ensure an orderly structure at the content level. The document taxonomy and the smart features extracted for each type are listed here:

| Document Type | Document Type Description | Smart Feature | Feature Description |
| --- | --- | --- | --- |
| Views Submission | The most common document. Submissions by Parties and observers on their views, positions, proposals, and opinions on specific negotiating points. | Key Asks | Central demands or requests formulated in the document. |
| | | Indication of Fixed Positions | Explicit or implicit “red lines,” i.e., non-negotiable positions or conditions. |
| Feedback Submission | Direct feedback to programs or actors, often in response to an open consultation. | Key Recommendations | Key recommendations for action resulting from the analysis. |
| | | Key Policies and Programmes mentioned | Most important policies, strategies, or programs mentioned. |
| Report Submission | Reports providing specific data, case studies, best practices, or methodological details on a topic. | Actionable Suggestions | Concrete and actionable suggestions for improvement. |
| | | Target of Feedback | The specific person, department, agreement, or process to which the feedback refers. |
| Plenary Statement | Official speeches and statements made during the opening or closing plenary sessions. | Key Speaking Points | Main messages and key arguments of the speech. |
| | | Theme | Overarching theme or main topic of the statement. |
| Unsolicited Submission | A general category for voluntary submissions that are not in response to a specific call or mandate, often for general policy statements. | Key Message | Central concern that the submitting party wishes to convey. |

In addition to the “clip to clipboard” function for individual smart feature results, an “export” function prepares the results for saving as an .xlsx file.

Position Comparison: From a previously selected country submission in the Submission Explorer, or via the menu at the top of the website, the user can compare submissions within a selected issue. The AI tool places the key insights from the enhanced metadata side by side so that the documents can be compared in one combined view. Towards the end of the frame, an AI-automated analysis extracts key asks and fixed positions and uses them to indicate potential alignment. This assessment follows predefined criteria in a multi-stage process.

In the first step, “Assessing information quality”, the model evaluates the information quality of each submission across both Key Asks and Fixed Positions. Quality is rated as high, moderate, low, or null based on the level of detail, specificity, and relevance to COP30 themes. In the second step, “Detecting contradictions”, the model assesses whether one party’s positions contradict the other’s. To do so, it checks for specific conflict types: direct negation (require vs. prohibit), mutually exclusive requirements, quantitative conflicts (e.g., ≥$100B vs. ≤$50B) or actor/temporal/mechanism conflicts. If contradictions are detected, the parties are classified as “Potentially Not Aligned.” Otherwise, in the third step, “Measuring similarity of Key Asks”, the model compares the parties’ key insights across five analytical dimensions: Goals, Measures, Timelines, Sectors, and Finance.
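To make the order of the three steps concrete, here is a minimal sketch. NegotiateCOP performs these assessments with an LLM; the rule-based stand-ins below (word counts for quality, structured `Position` records for contradictions, keyword overlap for similarity) and all names and thresholds are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Optional

DIMENSIONS = ("Goals", "Measures", "Timelines", "Sectors", "Finance")


@dataclass
class Position:
    # Hypothetical structured form of a key ask or fixed position.
    topic: str
    text: str
    stance: str = "require"          # "require" or "prohibit"
    minimum: Optional[float] = None  # lower bound, e.g. ">= 100" (in $B)
    maximum: Optional[float] = None  # upper bound, e.g. "<= 50" (in $B)


def rate_quality(positions):
    """Step 1 stand-in: rate detail by average word count (assumed thresholds)."""
    if not positions:
        return "null"
    average = sum(len(p.text.split()) for p in positions) / len(positions)
    if average < 5:
        return "low"
    return "moderate" if average < 15 else "high"


def contradicts(a, b):
    """Step 2 stand-in: direct negation or a quantitative conflict on one topic."""
    if a.topic != b.topic:
        return False
    if {a.stance, b.stance} == {"require", "prohibit"}:
        return True
    for low, high in ((a, b), (b, a)):
        if low.minimum is not None and high.maximum is not None and low.minimum > high.maximum:
            return True
    return False


def jaccard(x, y):
    """Share of keywords that two sets have in common (0.0 if both are empty)."""
    union = x | y
    return len(x & y) / len(union) if union else 0.0


def assess(positions_a, keywords_a, positions_b, keywords_b):
    """Run the three steps in order; similarity is only computed without contradictions."""
    result = {"quality": (rate_quality(positions_a), rate_quality(positions_b))}
    if any(contradicts(p, q) for p in positions_a for q in positions_b):
        result.update(label="Potentially Not Aligned", similarity=None)
        return result
    result.update(label=None, similarity={
        d: jaccard(keywords_a.get(d, set()), keywords_b.get(d, set())) for d in DIMENSIONS
    })
    return result


# Invented example mirroring the quantitative conflict ">= $100B vs. <= $50B".
party_a = [Position("climate finance", "Mobilise at least $100B per year", minimum=100.0)]
party_b = [Position("climate finance", "Contributions must not exceed $50B per year", maximum=50.0)]
print(assess(party_a, {}, party_b, {})["label"])
```

The early return reflects the process described above: similarity across the five dimensions is only measured once no contradiction has been found.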

Please note that the “Indication of Alignment” is based solely on the content of the underlying documents. It does not reflect a party’s overall, unspoken or more complex position on a specific topic. The tool should be used as a starting point for further investigation, not as a final answer.

Portal Chat: The third feature allows users to ask free-text questions, which the AI answers using information from the submission documents. The answers can be improved by searching for a specific issue and selecting the documents to use, or by filtering the documents by type, session, submitter, type of party or thematic area. The source documents used to generate the response are linked for convenient verification. The underlying technology is a retrieval-augmented generation (RAG) pipeline: before answering, the model receives only the documents that are semantically relevant to the user’s question.
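The retrieval step of such a pipeline can be sketched as follows. This is a simplified illustration, not the tool's code: a bag-of-words cosine similarity stands in for the semantic embeddings a real RAG pipeline would use, the LLM call is omitted, and the documents, field names and prompt wording are invented.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def cosine(a, b):
    """Cosine similarity of two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question, documents, top_k=2, **filters):
    """Apply metadata filters, then return the top_k documents most similar to the question."""
    candidates = [d for d in documents if all(d.get(k) == v for k, v in filters.items())]
    query = Counter(tokenize(question))
    ranked = sorted(candidates,
                    key=lambda d: cosine(query, Counter(tokenize(d["text"]))),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(question, retrieved):
    """Assemble the prompt so that the answer can cite its source documents."""
    context = "\n".join(f"[{d['id']}] {d['text']}" for d in retrieved)
    return f"Answer using only the sources below and cite their ids.\n{context}\nQuestion: {question}"


# Invented sample documents, not taken from the portal.
DOCUMENTS = [
    {"id": "doc-1", "submitter": "Party A",
     "text": "Loans at market rate should not count as climate finance because they increase debt."},
    {"id": "doc-2", "submitter": "Party B",
     "text": "Technology transfer and capacity-building need dedicated funding."},
    {"id": "doc-3", "submitter": "Party A",
     "text": "Adaptation indicators should be reported every two years."},
]

question = "Why oppose counting loans at market rate as climate finance?"
sources = retrieve(question, DOCUMENTS, top_k=1)
print(build_prompt(question, sources))
```

Because only the retrieved documents enter the prompt, their ids can be shown next to the answer, which is what makes the linked-source verification possible.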

The tool works best as an in-depth knowledge query tool, for instance to understand the “why” or the “rationale” behind a strong position. As an example, you could ask “Why is the selected country firmly opposed to counting loans at market rate as climate finance?” and would receive a narrative explanation as an answer, but only if the documents contain the information.

Screenshots of the different components. Left: Submissions found by the Submission Explorer using a selection of filters. Right: Answer to an example query about technology transfer and capacity-building without prior document selection.

The platform is fully public and free to use – designed as a digital public good available to all delegations. No login is required, and no personal information, queries or interactions are tracked. The tool is hosted with IONOS on a server in Germany, follows EU data privacy and AI regulations and is GDPR compliant. Additionally, the servers run on 100% renewable energy.

We are grateful to all the users who participated in testing and are pleased to have achieved a robust evaluation of our results.

Try NegotiateCOP for yourself: www.negotiatecop.org

Learnings from NegotiateAI & the Whole-of-Government Approach

Building on the GIZ Data Lab’s success with the prototype NegotiateAI, the idea of creating a tool to support under-resourced delegations in complex multilateral negotiations was successfully scaled and further developed in collaboration with a broader network of project partners.

Valuable user feedback, together with our first-hand insights gathered on the ground during the INC meetings of the UN Plastics Treaty in Geneva and Busan, helped us refine the concept and avoid common pitfalls we encountered in developing AI tools for negotiation contexts.

This next step was realized through close cooperation between the data labs of the Federal Ministry for Economic Cooperation and Development (BMZ), the Federal Foreign Office (AA), and the Federal Ministry for the Environment, Climate Action, Nature Conservation and Nuclear Safety (BMUKN) – a so-called Whole-of-Government effort.

We are proud to present this new and improved version, implemented together with the public-sector consultancy PD, and enriched by the outstanding advocacy and expertise of CEMUNE, particularly regarding UNFCCC negotiation procedures and evaluation.

We depend on your comments and reactions to improve the tool and fix bugs. If you have questions or feedback, please do not hesitate to reach out: datalab@giz.de