Introduction

Artificial intelligence is increasingly being integrated into professional workflows and organisational processes. However, as the industry boasts a myriad of model options, it has become difficult to know what individual models are capable of. Is there one specific AI that best suits my personal or professional needs?

AI benchmarks have become an important way to put different models and their capabilities to the test. This article lays out the basic facts on AI benchmarks, followed by a deeper dive into specific examples. We hope it enables more informed decisions about which models to deploy in your work, and gives you some insider knowledge for the next water-cooler conversation.

What are benchmarks?

AI benchmarks serve as standardised evaluation frameworks that measure and test an AI model’s performance. The formats of these tests vary considerably. Some benchmarks are structured as multiple-choice tests. Others evaluate an AI’s ability to complete a specific task or examine the quality of text responses to a set series of questions. Generally, however, benchmarks operate in a straightforward manner: a model is given a task that it must complete. The model’s performance is then evaluated according to a certain metric. Depending on how closely the AI’s output resembles the expected solution, a score is generated, typically ranging between 0 and 100, with 100 being the best.
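As a rough illustration of the scoring step for a multiple-choice benchmark, the sketch below compares a model's answers with an answer key and reports the share of correct answers on a 0 to 100 scale. The function name `score_multiple_choice` and the sample answers are invented for this example; real benchmarks often use more elaborate metrics.

```python
def score_multiple_choice(predictions, answer_key):
    """Return the percentage of answers that match the answer key (0-100)."""
    if not answer_key:
        raise ValueError("answer_key must not be empty")
    if len(predictions) != len(answer_key):
        raise ValueError("predictions and answer_key must have the same length")
    # Count positions where the model's choice equals the expected choice.
    correct = sum(p == a for p, a in zip(predictions, answer_key))
    return 100.0 * correct / len(answer_key)


# Hypothetical four-question test: the model gets three answers right.
answer_key = ["B", "D", "A", "C"]
model_answers = ["B", "D", "C", "C"]
print(score_multiple_choice(model_answers, answer_key))  # 75.0
```

Open-ended tasks cannot be scored by exact matching like this and usually need a task-specific metric or a human or automated grader.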

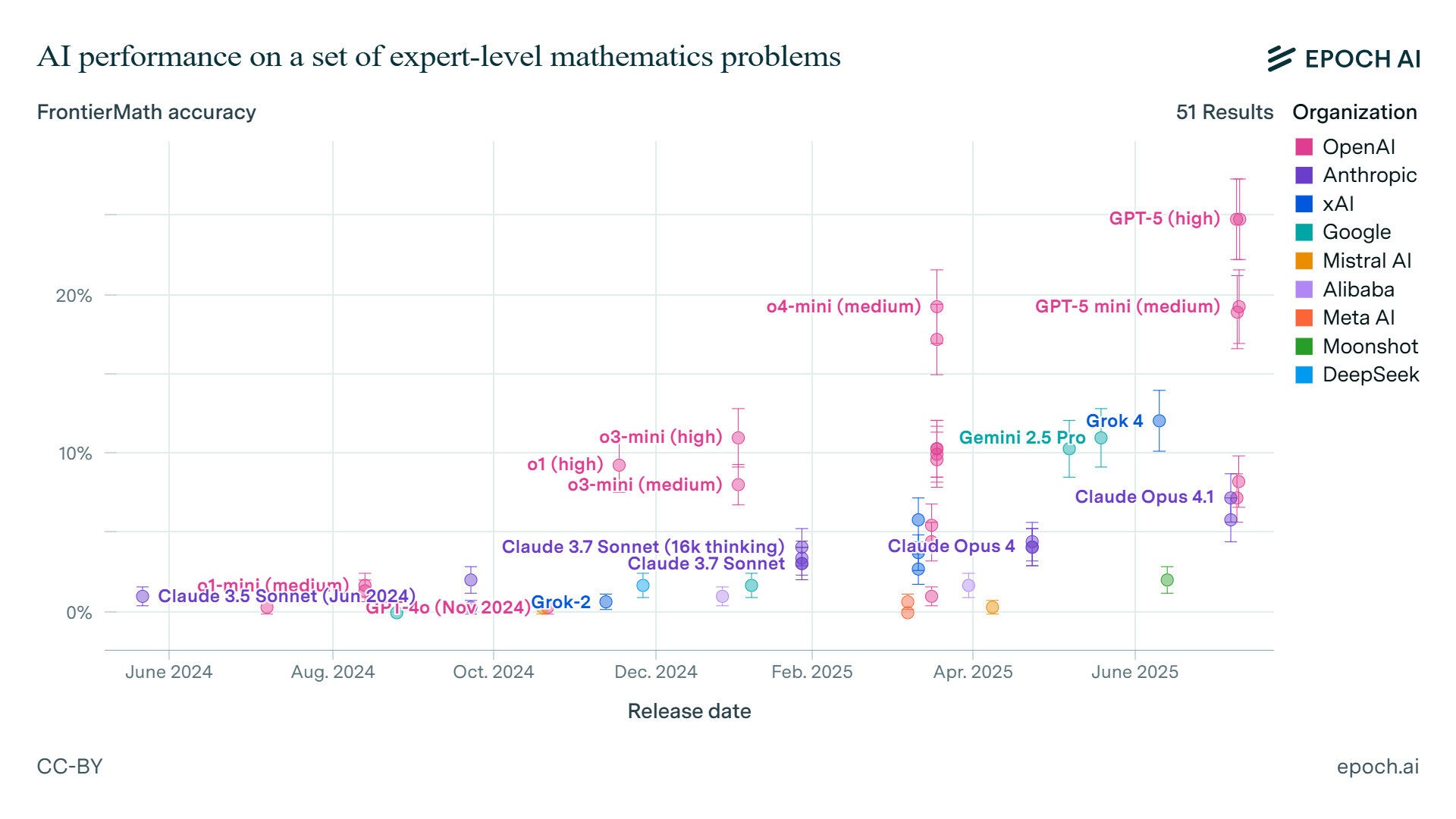

These scores are published on benchmarking leaderboards, such as the one maintained by Epoch AI, which rank and compare the results of different models on the same test.

Image 1: ‘AI Benchmarking Hub’. Retrieved from https://epoch.ai/benchmarks [online resource]. Accessed 4 Sep 2025.

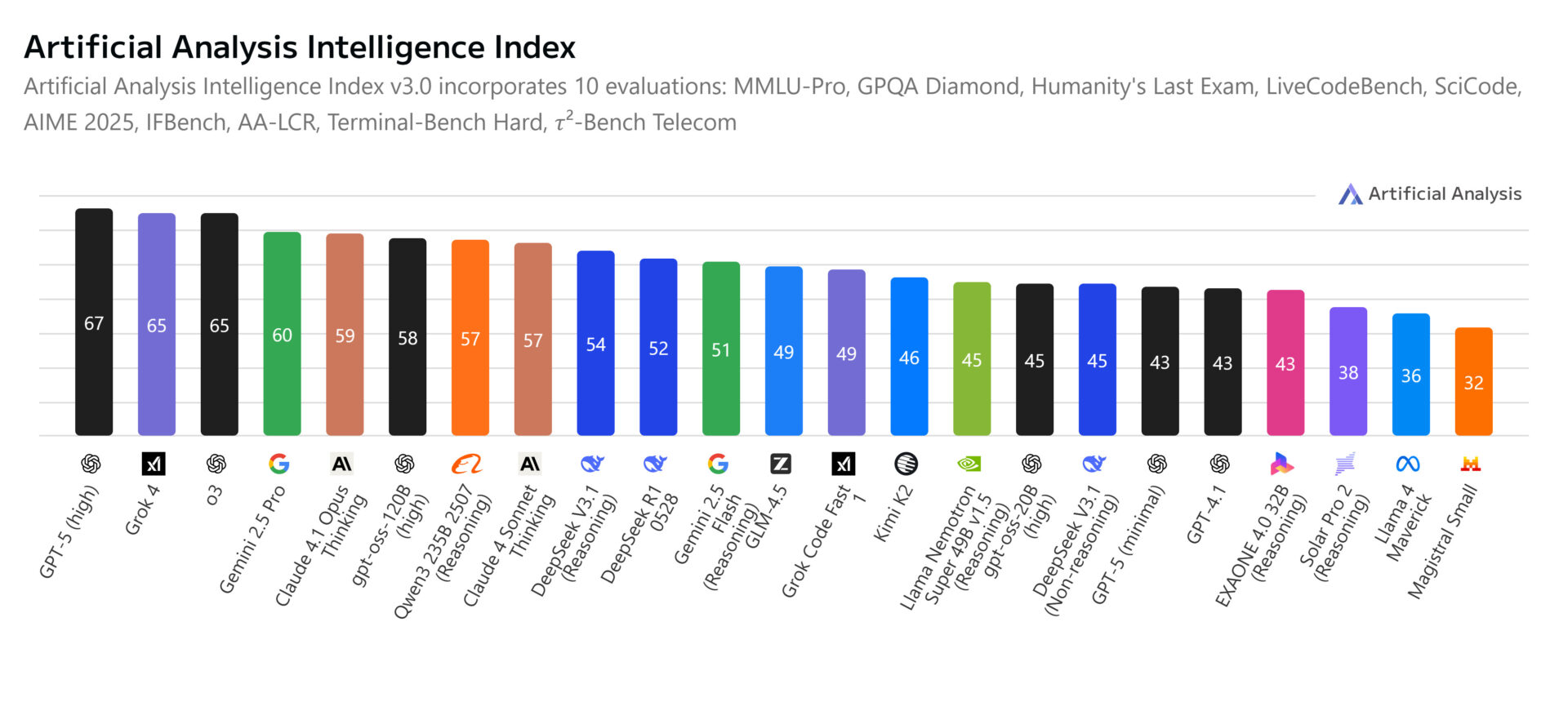

Independent sources, such as the Artificial Analysis leaderboard, provide a wider overview of benchmark scores across multiple evaluations.

Image 2: Retrieved from https://artificialanalysis.ai/models.

These rankings have become instrumental in guiding decisions about AI model selection and deployment, as well as regulation.

How do different models score?

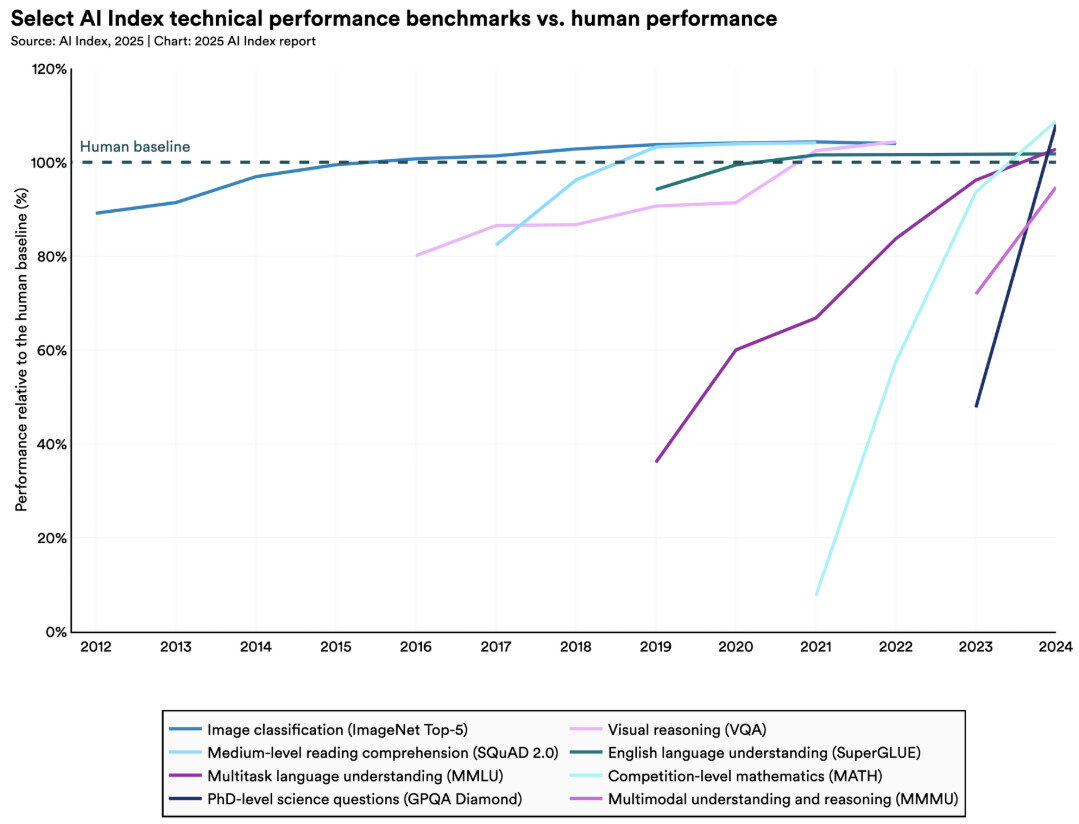

Performance varies significantly across different AI models and benchmarks. While we won’t dive into all the specific numbers here (they change frequently!), it’s helpful to understand that model performance is typically measured against a “human baseline” – the average score a human would achieve on the same test.

Image 3: Retrieved from https://hai.stanford.edu/ai-index/2025-ai-index-report/technical-performance.

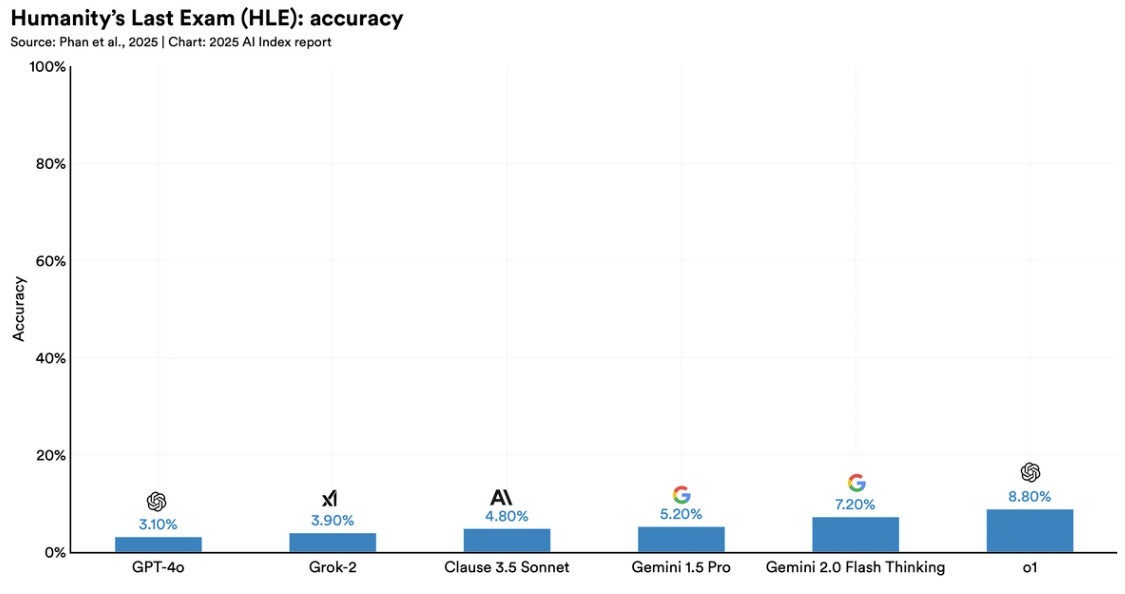

As of 2025, performance on benchmarks has increased drastically, with some models said to outperform humans on specific tasks under time constraints. Other tests, however, still show the limitations of AI in domains such as answering PhD-level questions (see the HLE benchmark in the glossary).

Image 4: Retrieved from https://hai.stanford.edu/ai-index/2025-ai-index-report/technical-performance.

Recent findings have also shown significant variation in the performance of the same model when it is hosted on different infrastructures. Testing the same model on the AIME Math benchmark (see glossary) across different providers resulted in a variation of up to 15 points in benchmark scores (see more here). Surprisingly, the industry’s major cloud providers were the ones falling behind smaller, specialised infrastructure providers in recent tests.
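To make the idea of a provider gap concrete, the following sketch computes the spread between the best and worst score for one model served by several providers. The provider names and scores are made up purely for illustration and are not taken from any real test; `provider_spread` is a hypothetical helper.

```python
def provider_spread(scores_by_provider):
    """Return (best provider, worst provider, point gap between them)."""
    if not scores_by_provider:
        raise ValueError("need at least one provider score")
    best = max(scores_by_provider, key=scores_by_provider.get)
    worst = min(scores_by_provider, key=scores_by_provider.get)
    return best, worst, scores_by_provider[best] - scores_by_provider[worst]


# Invented scores for the same model on the same benchmark (illustration only).
scores = {"provider_a": 93.0, "provider_b": 86.5, "provider_c": 78.0}
best, worst, gap = provider_spread(scores)
print(f"{best} leads {worst} by {gap} points")
```

A gap of this kind says nothing about the model itself, only about how it is being served, which is why it is worth checking which provider produced a published score.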

What do benchmarks evaluate?

Benchmarks attempt to measure certain capabilities within a model and compare them to human skills. It is therefore tempting to see them as a way of measuring intelligence, but most of these benchmarks do not employ a clear definition of intelligence. Rather, they measure capabilities on certain tasks such as math problems, coding or natural language processing. For a deeper dive into the capabilities that specific benchmarks test for, consult our benchmark glossary at the end of this post.

What are the challenges in benchmark evaluation?

Despite their utility, AI benchmarks face several limitations and legitimate criticism. Currently, the biggest challenge is benchmark saturation: models perform so well on existing tests that the benchmarks can no longer effectively distinguish between their capabilities and performance.
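One simple way to picture saturation is to check whether the leading scores all sit close to the maximum, leaving no room to tell models apart. The sketch below does this with an arbitrary 5-point headroom; both the threshold and the sample scores are assumptions chosen for illustration, not an established definition.

```python
def is_saturated(top_scores, max_score=100.0, headroom=5.0):
    """True when every listed score is within `headroom` points of the maximum."""
    if not top_scores:
        raise ValueError("need at least one score")
    return all(max_score - score <= headroom for score in top_scores)


# Invented leaderboard excerpts (illustration only).
print(is_saturated([97.1, 96.4, 95.8]))  # True: all scores cluster at the ceiling
print(is_saturated([97.1, 82.0, 64.5]))  # False: the test still separates models
```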

Additionally, the push towards generality in AI models has made their evaluation even more difficult: models no longer complete single, specific tasks and thus require sophisticated evaluations that cover the full spectrum of capabilities without sacrificing depth.

Furthermore, proprietary models constantly compete on the market for benchmark scores, advancements and users, which leads developers to optimise their models for respected benchmarks by training them on the same data the benchmark uses. The resulting problem is known as ‘overfitting’, where a model performs well on test data but struggles to deal with real-world data.
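A common way to look for this kind of leakage is to check how much of a benchmark question appears word for word in training text. The sketch below measures the share of a question's word 5-grams that also occur in a piece of training text. It is a simplified illustration with invented example strings, and the helper names `ngrams` and `overlap_ratio` are hypothetical; it does not describe how any particular developer checks for contamination.

```python
def ngrams(text, n):
    """Return the set of word n-grams in `text` (lowercased, split on whitespace)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(benchmark_item, training_text, n=5):
    """Share of the benchmark item's n-grams that also appear in the training text."""
    item_grams = ngrams(benchmark_item, n)
    if not item_grams:
        return 0.0  # item shorter than n words: nothing to compare
    return len(item_grams & ngrams(training_text, n)) / len(item_grams)


# Invented strings for illustration only.
question = "what is the capital city of france"
leaked = "quiz: what is the capital city of france answer paris"
clean = "the weather in paris is mild in spring"
print(overlap_ratio(question, leaked))  # 1.0
print(overlap_ratio(question, clean))   # 0.0
```

A high ratio suggests the model may have seen the question during training, in which case a good score reflects memorisation rather than capability.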

Conclusion

AI benchmarks can provide a valuable evaluation of model capabilities, yet their interpretation requires careful consideration of both strengths and limitations. Challenges such as saturation and overfitting should remind us that scores alone cannot drive decision-making processes even though they can be a useful starting point. As you consider implementing AI solutions in your work, critical questions should guide the process:

What is the specific capability I expect from an AI model?

Which existing benchmarks validly reflect the capabilities I am looking for?

Is the benchmark score a valid reflection of the model’s sought-after capabilities?

What factors other than task-specific capability am I looking for (infrastructure demands, ethical standards etc.) and how do I balance them?

Ultimately, we want to encourage anyone seeking to integrate AI into their work to keep critically examining AI’s development and potential capabilities. The Data Lab is happy to discuss this over a casual coffee or as part of a potential experiment.

Out to prove you can still beat AI?

We highly recommend having a look at the ARC-AGI benchmark. Designed to be the first benchmark testing for Artificial General Intelligence (AGI), ARC-AGI targets the precise difference between general intelligence and skill. In other words – the benchmark is easy for humans but hard for AI. See for yourself!

A deeper dive

The current benchmark landscape is vast, making it impossible to stay informed about every evaluation out there.

Some useful leaderboards can be found through the following links:

https://artificialanalysis.ai/leaderboards/models

https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard#/?official=true

To get a rough understanding of the specific capabilities benchmarks test for, we have created a small glossary of current key evaluations alongside a brief description of what they evaluate:

Massive Multitask Language Understanding (MMLU) is one of the most comprehensive general knowledge assessments, comprising over 15,000 multiple-choice questions across 57 academic subjects. It evaluates the breadth of an LLM’s knowledge, the depth of its natural language understanding and its ability to solve problems based on acquired knowledge. Most Large Language Models (LLMs) now achieve scores over 88% on the MMLU benchmark, which is why, as of 2025, the test is slowly being phased out.

Humanity’s Last Exam (HLE) tests Large Language Models (LLMs) on 2,500 PhD-level questions across a broad subject range. HLE was designed to be the final closed-ended academic benchmark of its kind and is seriously challenging the high scores of over 90% that LLMs are currently receiving on benchmarks such as the MMLU.

GPQA is a challenging data set of 448 multiple-choice questions on the topics of biology, physics, and chemistry; GPQA Diamond is its hardest subset, comprising 198 of those questions. On the full set, PhD-level experts achieve 65% accuracy whilst skilled non-experts only reach 34% despite access to the web.

SuperGLUE is a multitask benchmarking tool for evaluating, analysing and comparing the natural language understanding of LLMs.

MMMU is a benchmark designed to evaluate multimodal models on massive multi-discipline tasks demanding college-level subject knowledge and deliberate reasoning. The exam includes 11,500 questions covering six core disciplines and measures three essential skills: perception, knowledge and reasoning.

SWE-bench evaluates a model’s coding skills using more than 2,000 real-world programming problems pulled from the GitHub repositories of 12 different Python-based projects.

Grade School Math 8K (GSM8K) evaluates how well models solve grade-school arithmetic word problems, specifically their step-by-step (chain-of-thought) reasoning.

The American Invitational Mathematics Examination (AIME) is a real-life, invite-only mathematics competition for high-school students, consisting of 15 questions that increase in difficulty. Current benchmark results show that no individual model has completely mastered the test, although several have already achieved high scores.